We asked ChatGPT agent to go car spotting on Google Street View. The way it did it? Surprisingly clever.

Most people would give up.

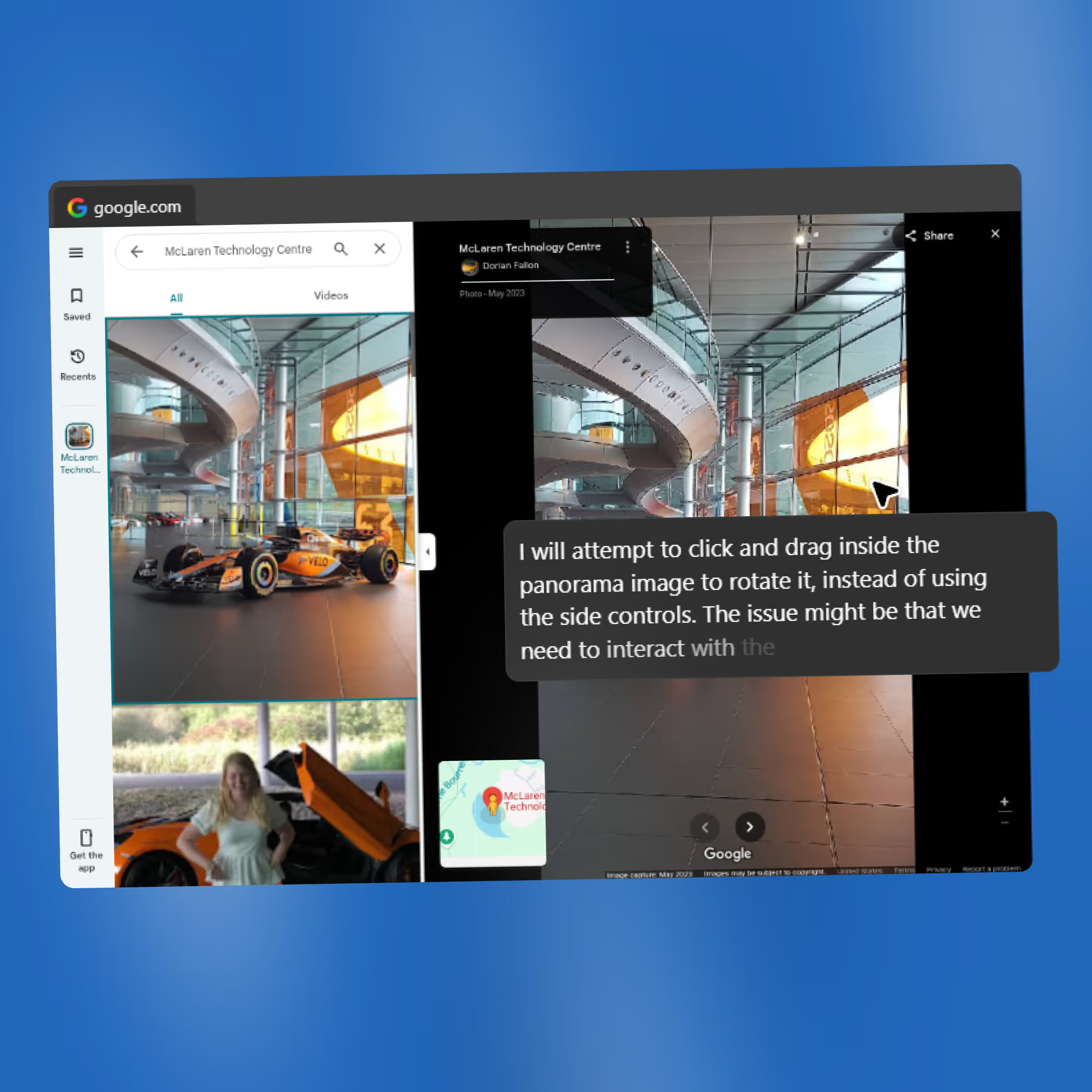

The result: ChatGPT agent found three McLarens on Street View, including a rare Senna.


We gave the agent a task: find three McLarens on Google Street View, and one of them had to be rare.

It could’ve brute-forced the search, scrolling aimlessly through cities and dragging the little yellow Pegman down every street. Instead, it pivoted.

It:

→ Dug through Reddit threads from car-spotting communities

→ Read niche blogs about exotic cars on Street View

Then it focused on a smarter angle:

→ Looked up McLaren dealerships

→ Pulled their exact coordinates

→ Scanned their showrooms using 360° views

In the end, it found them:

→ A yellow 720S in Newport Beach

→ A McLaren F1 at the McLaren Technology Centre (MTC) in Woking

→ And a blue Senna in a Dubai showroom

That was one of those rare moments where AI didn’t just power through - it paused, thought, and picked a smarter route. And it worked.

This is what’s coming next.

Agents are starting to use the tools we built for people, and they’re doing it in ways we didn’t expect. An agent scrolls, clicks, parses the DOM, reads labels, follows links, and analyzes patterns. It also guesses.

But it doesn’t rely on aesthetics or intuition. It depends on logic, consistency, and exposed structure.

As designers, we need to catch up. That means treating AI agents as a new type of user and considering:

→ Consistent hierarchy and layout logic - agents often infer meaning from structure. Break that structure, and they lose context.

→ Clear labeling of buttons, links, and fields - not just for people, but for agents that avoid relying on visual cues whenever they can.

→ Predictable interaction patterns - dropdowns, modals, filters - can an agent identify and use them without custom instructions?

→ Semantic HTML and ARIA roles - ignored too often, but essential for programmatic understanding.

→ Content clarity - ambiguous or overly clever copy can confuse an AI that is trying to summarize or act on it.

→ State visibility - can an agent tell what’s active, selected, or in progress? Or is that state conveyed only through styling or buried in JavaScript?

→ Flow traceability - is there a logical, step-by-step path through key user journeys like onboarding or checkout?

Designers who understand how machines interpret structure, content, and flow will build better systems - ones that hold up under human and machine scrutiny.

At Stera, we’re already designing for both humans and machines.

If your product needs to make sense to a person and an agent, let’s talk.